Customer Feedback Surveys

Collect feedback with beautiful surveys

Link it to user data and segment by behavior, demographics, and more

No credit card required

Build Surveys that look like your brand

Know which user gave what feedback

Trusted by 100+ startups worldwide

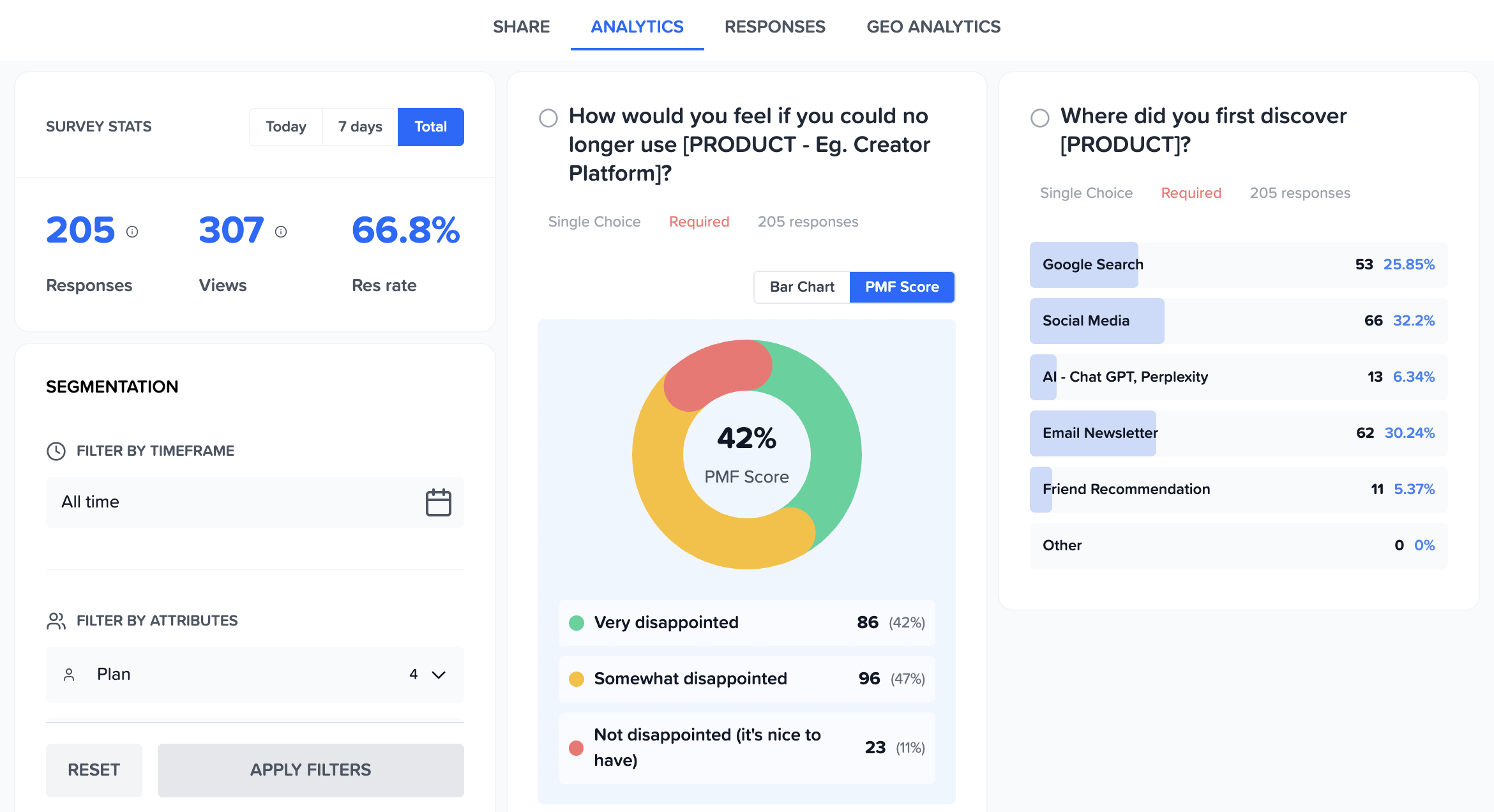

Powerful Analytics

Analytics that connect the dots for you

See trends, patterns, and priorities without digging through spreadsheets.

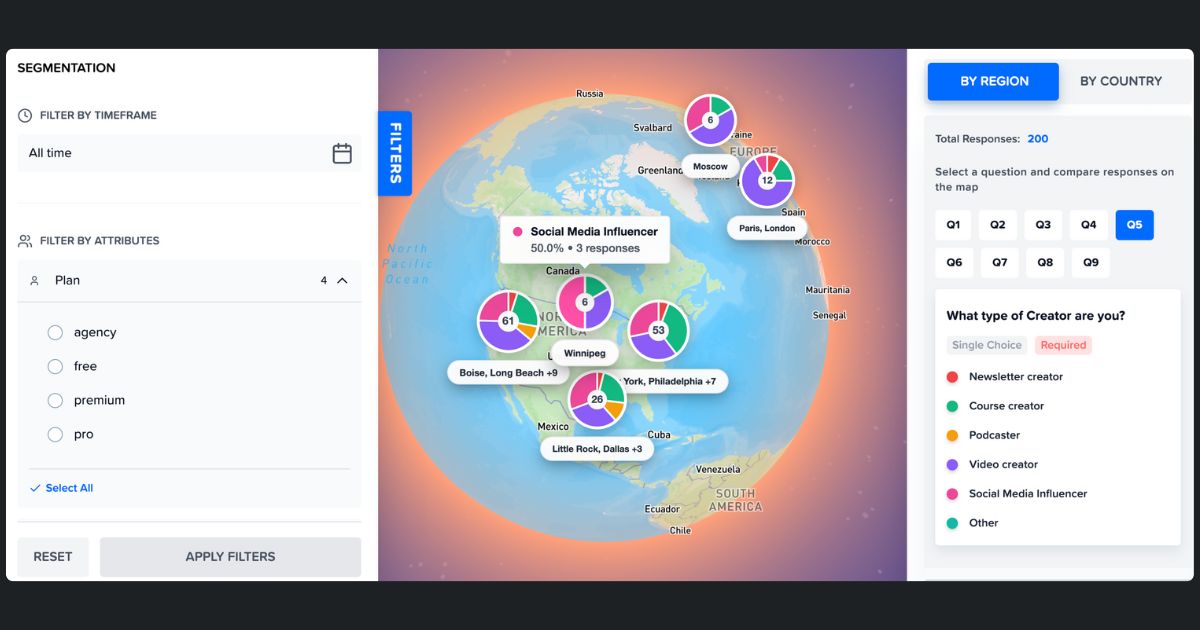

Geo Analytics

See Feedback on a World Map

Your US customers love you. Your EU customers are frustrated. You'd never know from an average. Map feedback by region to spot geographic patterns instantly.

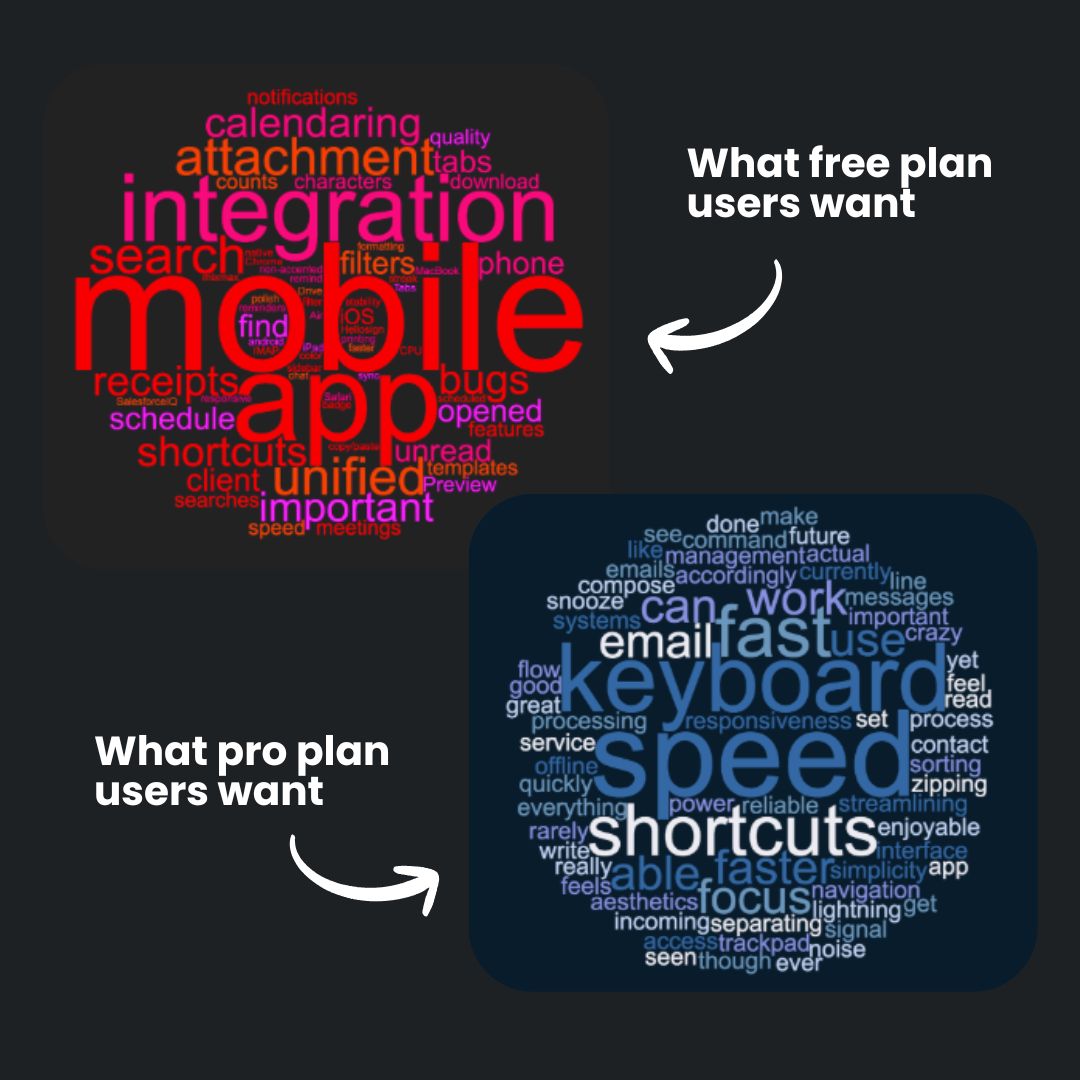

Analyze like a Pro

See a Word Cloud from Open-Ended Feedback

See what each segment is actually saying. One word cloud per segment, so you know what matters to whom.

- ✓ Auto-generated from survey responses

- ✓ Filter by segment to see different priorities

- ✓ Spot patterns without reading every response

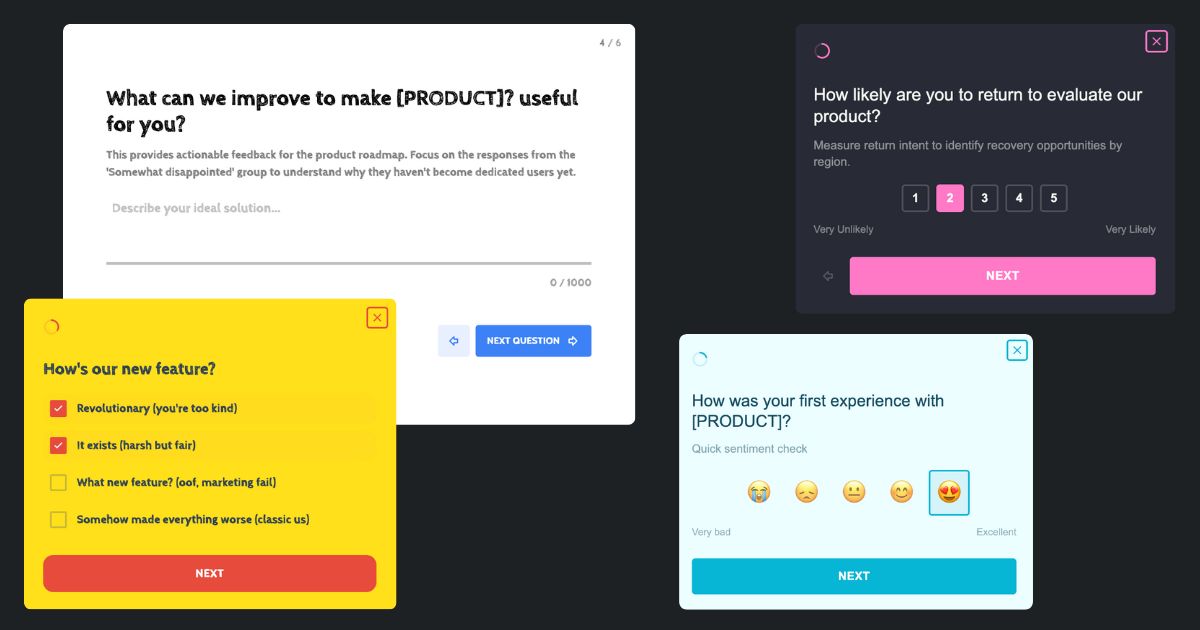

Build like a Pro

Surveys that look like your product

Match your surveys to your product. Custom colors, logo, and styling — so feedback collection feels native, not third-party.